Introduction

This joint effort between Distant Focus Corporation and Dr. Mark Neifeld of the University of Arizona was supported by the Microsystems Technology Office (MTO) within DARPA; the program manager was Dr. Ravindra Athale. The program was originally called the Integrated Computational Imaging System (ICIS) and was the precursor to the MONTAGE program.

This project involved the construction of an array of 128 cameras for demonstrating distributed imaging algorithms. The main idea is to use a set of low resolution detectors distributed randomly on a surface to form a higher resolution image, while also collecting more light than an individual sensor could. This design has several potential advantages over conventional imaging: scalability, a low aspect ratio (a flat camera), and high fault tolerance, with graceful image degradation if individual sensors are damaged.

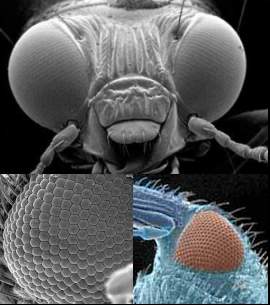

Multiple Aperture Vision Systems

Image courtesy of the Institute of Medical Sciences at the University of Aberdeen

We are all familiar with the conventional single aperture camera, a design that has served society's photographic needs for well over a century. However, nature provides numerous examples of creatures that rely on multiple aperture vision systems. For example, the compound eye of a dragonfly contains over 30,000 lenses, enabling it to gather detailed information from its surroundings. Is there an advantage to this format? Transferring this multiple aperture architecture to a camera could open design opportunities that have not previously been explored.

The goal of this investigation was to implement a distributed imaging algorithm, that is, to fuse images obtained from a set of low resolution sensors in order to obtain a higher resolution view of a scene. With this multiple aperture platform, we examined the issues involved in building a practical system and investigated how visual performance is enhanced.

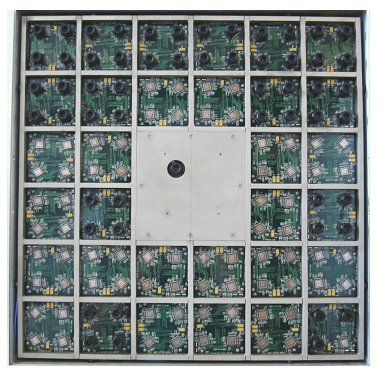

Distributed Imaging Demonstration Platform

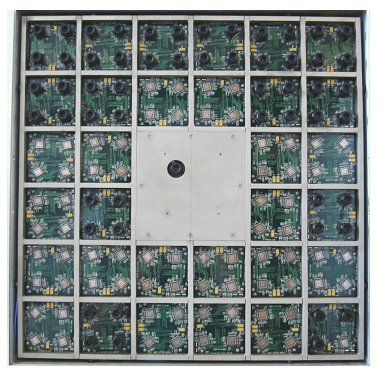

The camera array contains 128 monochrome digital CMOS image sensors controlled by a PC104 computer. Fifty-nine of the image sensors have a 5.6 mm focal length lens attached. The algorithm is run from a remote workstation or laptop that uses a TCP/IP network connection to transfer control and data information. The PC104 computer and its interface to the 32 acquisition boards are located behind the central plate.

Using this platform, we have demonstrated enhanced resolution; that is, the resolution of the composite image exceeds that of the individual sensors. The system also supports foveation: user-selectable regions of higher resolution.

The platform illustrates three important new features:

- Integrated imaging and processing systems provide features that cannot be readily achieved in a conventional package (enhanced resolution and foveation).

- The camera framework can be built much flatter, allowing even conformal designs.

- Large numbers of integrated, inexpensive sensors can provide performance that exceeds that of a higher-cost, single-element solution.

Hardware

Acquisition Electronics

An acquisition module consists of two boards: an image sensor board (left) and a digital interface (right). There are 32 acquisition boards in the system, together providing 128 monochrome image sensors. The sensors are rotated relative to each other to increase the diversity of the view.
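The text does not quantify what rotation adds. One way to picture it, under assumptions that are ours rather than the original system's, is to rotate a small hypothetical sensor's pixel centres into a common high resolution grid and count how many distinct sub-pixel positions ("phase cells") they land in. An unrotated sensor whose pixels line up with the grid always samples the same phase; a rotated one samples many. The refinement factor `K`, the 16×16 sensor size, and the helper `phase_cells` are all hypothetical.

```python
import math

K = 4  # hypothetical refinement factor of the common high resolution grid


def phase_cells(angle_deg, size=16):
    """Return the sub-pixel phase cells hit by one rotated, hypothetical sensor.

    Pixel centres of a size x size sensor are rotated about the sensor centre,
    then each centre is classified by which of the K x K sub-pixel cells of
    the common grid it falls in.
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half = size / 2
    cells = set()
    for i in range(size):
        for j in range(size):
            # Pixel centre relative to the centre of rotation.
            x, y = i + 0.5 - half, j + 0.5 - half
            # Rotate, then shift back into sensor coordinates.
            xr = cos_t * x - sin_t * y + half
            yr = sin_t * x + cos_t * y + half
            cells.add((math.floor(xr * K) % K, math.floor(yr * K) % K))
    return cells


if __name__ == "__main__":
    for angle in (0, 7, 19, 31):
        print(f"{angle:>2} deg: {len(phase_cells(angle))} of {K * K} phase cells")
```

In this toy setup the unrotated sensor hits exactly one of the 16 phase cells, while the rotated ones spread their samples across many, which is the kind of sampling diversity a reconstruction algorithm can exploit.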

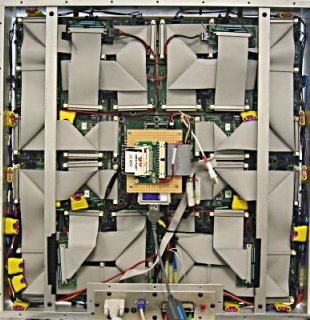

PC104 Computer Control, Interface Electronics and Data Bus

The PC104 computer and interface electronics control the camera array (left). Four ribbon cables provide connectivity between the interface and the acquisition modules (right).

Optics

Lenses are attached directly to the sensor cover plate (right). The optics are 5.6 mm focal length multi-element lenses. Fifty-six of the 128 sensors were configured with optics; the remaining sensors are reserved for future investigations.

Mechanical Framework

An Invar frame was constructed to ensure the stability of the camera array configuration. Invar, a nickel-iron alloy, has a very low coefficient of thermal expansion.

Integrated System

Completed camera array; 54 of the 128 cameras have been populated with lenses.

Algorithm

The basic Distributed Imaging Algorithm

- A collection of low resolution images is acquired.

- An affine transformation is applied to each image so that the set is mutually registered.

- The full set of aligned low resolution images is back-projected onto a common high resolution grid. This merged image becomes the initial high resolution estimate.

- A subset of the low resolution images is selected. For each selected sensor, a camera model is applied to the high resolution estimate to simulate the sampling, blurring, and transformation expected for that sensor. The differences between the simulated and the acquired images are measured and averaged, and a specified fraction of this averaged difference is used to correct the high resolution estimate.

- The process of comparing models against new subsets of low resolution images and updating the high resolution estimate is iterated, significantly reducing the mean error between the estimate and the scene.
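The steps above can be sketched in one dimension to make the data flow concrete. This is a minimal sketch under our own assumptions, not the project's code: every hypothetical sensor sees the scene translated by a whole number of high resolution pixels (the simplest affine case), its camera model is a box average over `FACTOR` pixels followed by decimation, and edges wrap around. The names `camera_model`, `back_project`, `initial_estimate`, `reconstruct`, and all parameter values are illustrative. The loop resembles the technique commonly called iterative back-projection.

```python
"""Minimal 1-D sketch of the iterative distributed imaging loop.

Hypothetical assumptions, not the original implementation: each low
resolution sensor views the scene translated by a whole number of high
resolution pixels (the simplest affine case), averages FACTOR neighbouring
pixels (blur plus pixel area), and keeps one sample per FACTOR high
resolution pixels. Edges wrap around for simplicity.
"""
import random

FACTOR = 4  # hypothetical resolution gain along the single dimension


def camera_model(hr, shift):
    """Simulate one sensor: translate, box-average FACTOR pixels, decimate.

    len(hr) must be a multiple of FACTOR.
    """
    n = len(hr)
    return [
        sum(hr[(start + shift + k) % n] for k in range(FACTOR)) / FACTOR
        for start in range(0, n, FACTOR)
    ]


def back_project(lr, shift, n):
    """Spread each low resolution value over the pixels it integrated."""
    hr = [0.0] * n
    for i, value in enumerate(lr):
        for k in range(FACTOR):
            hr[(i * FACTOR + shift + k) % n] += value
    return hr


def mean_of(vectors):
    """Element-wise average of equal-length lists."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def initial_estimate(images, shifts, n):
    """Back-project every registered image and average the results."""
    return mean_of([back_project(img, s, n) for img, s in zip(images, shifts)])


def reconstruct(images, shifts, n, iterations=200, subset_size=2, step=0.5, seed=0):
    """Refine the initial estimate using random subsets of the sensors."""
    estimate = initial_estimate(images, shifts, n)
    rng = random.Random(seed)
    for _ in range(iterations):
        chosen = rng.sample(range(len(images)), subset_size)
        corrections = []
        for c in chosen:
            # Compare what the camera model predicts with what was acquired.
            predicted = camera_model(estimate, shifts[c])
            residual = [obs - pred for obs, pred in zip(images[c], predicted)]
            corrections.append(back_project(residual, shifts[c], n))
        # Apply a fixed fraction of the averaged difference.
        update = mean_of(corrections)
        estimate = [e + step * u for e, u in zip(estimate, update)]
    return estimate


def rms_error(a, b):
    """Root-mean-square difference between two equal-length lists."""
    return (sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)) ** 0.5


if __name__ == "__main__":
    n = 48
    # Bars with a 6-pixel period: finer than one sensor can resolve alone.
    scene = [1.0 if (j // 3) % 2 == 0 else 0.0 for j in range(n)]
    shifts = [0, 1, 2, 3]  # one sub-pixel phase per hypothetical sensor
    images = [camera_model(scene, s) for s in shifts]
    first = initial_estimate(images, shifts, n)
    final = reconstruct(images, shifts, n)
    print(f"initial RMS error: {rms_error(first, scene):.3f}")
    print(f"final RMS error:   {rms_error(final, scene):.3f}")
```

In the demo the bar period is 1.5 low resolution pixels, below what any single sensor can resolve, yet the final estimate has a lower RMS error than the initial back-projection. Detail that the box average removes completely cannot be recovered by any number of iterations. The `step` argument plays the role of the "specified fraction" in the text; smaller values converge more slowly but more smoothly.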

The target object: an Air Force resolution chart.

Registration: the orientation of the camera determines how the image is formed. Typically, an affine transformation describes the relationship between the views.

Optical blurring and pixel layout degrade the image. In addition, sensor noise, quantization, and limited dynamic range can further reduce the fidelity of the scene.

Sensor sampling reduces the available resolution.
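The description above does not say how the registration parameters were measured. A common approach, assumed here rather than taken from the project, is to find matching features (for example, bar corners on the resolution chart) in two views and fit the six affine parameters by linear least squares. The functions `fit_affine`, `apply_affine`, `solve3`, and `det3` are hypothetical helpers; feature detection and matching are not shown.

```python
import math


def det3(m):
    """Determinant of a 3 x 3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, v):
    """Solve m @ p = v for p with Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("points are collinear; the affine map is undetermined")
    solution = []
    for col in range(3):
        replaced = [row[:] for row in m]
        for r in range(3):
            replaced[r][col] = v[r]
        solution.append(det3(replaced) / d)
    return solution


def fit_affine(src, dst):
    """Least-squares (a, b, c, d, e, f) with x' = a x + b y + c, y' = d x + e y + f."""
    rows = [(x, y, 1.0) for x, y in src]
    # Normal equations (G^T G) p = G^T u, shared by both output coordinates.
    gtg = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    gtu = [sum(r[i] * u for r, (u, _) in zip(rows, dst)) for i in range(3)]
    gtv = [sum(r[i] * v for r, (_, v) in zip(rows, dst)) for i in range(3)]
    a, b, c = solve3(gtg, gtu)
    d, e, f = solve3(gtg, gtv)
    return a, b, c, d, e, f


def apply_affine(params, point):
    """Map one (x, y) point through the affine parameters."""
    a, b, c, d, e, f = params
    x, y = point
    return a * x + b * y + c, d * x + e * y + f


if __name__ == "__main__":
    # Hypothetical ground truth: 10 degree rotation, 2% scale, sub-pixel shift.
    s, t = 1.02 * math.cos(math.radians(10)), 1.02 * math.sin(math.radians(10))
    true_params = (s, -t, 3.5, t, s, -1.25)
    features = [(0, 0), (40, 0), (0, 30), (40, 30), (20, 15)]
    matched = [apply_affine(true_params, p) for p in features]
    print(fit_affine(features, matched))
```

With noise-free correspondences the fit recovers the assumed parameters; with real, noisy feature positions, using more than the minimum three non-collinear points lets the least-squares solution average out some of the error.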

How does the algorithm aid imaging?

The results indicate that N² low resolution image sensors can produce about the same performance as a single high resolution sensor with N times the resolution along each dimension. This suggests that a sufficiently large collection of sensors could produce images whose resolution exceeds that of state-of-the-art single sensors, and even of sensors yet to be developed. Potential applications include inexpensive high resolution cameras, wide-angle surveillance with embedded high resolution regions (foveation), and cameras with a planar profile.
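As a rough bookkeeping check on this scaling (sample counting only, not a proof that the resolution is actually reached), N² sensors of M_x × M_y pixels each record the same number of samples as one sensor with N times as many pixels along each axis:

$$
N^2 \,(M_x M_y) = (N M_x)(N M_y)
$$

For example, with a hypothetical 640 × 480 sensor and N = 4, sixteen sensors record 16 × 307,200 = 4,915,200 samples, the same as a single 2560 × 1920 sensor. Whether those samples translate into real resolution depends on the sub-pixel diversity of the views, the optical blur, and noise.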