What happens when we have placed so many sensors around us that we can no longer decide what we are looking for, when we are so busy separating useful information from useless data and integrating readings from multiple sensors that we forget our mission? What if we could build "smart" sensors? What if these smart sensors could reasonably determine what is interesting and what is background noise?

Introduction and Project History

The Defense Advanced Research Projects Agency (DARPA), through its Advanced Technology Office (ATO), funded the University of Illinois at Urbana-Champaign (UIUC) in late summer 2000 to study "Tomographic Imaging on Distributed Unattended Ground Sensor Arrays." Dr. David Brady proposed and directed the research, which was carried out by the Photonic Systems group at the Beckman Institute; the group's core members later formed Distant Focus. The project was named MEDUSA, for "Mobile Distributed Unattended Sensor Arrays." The research grew out of Argus, an integrated multi-camera/supercomputer project that provided a 3-D imaging and distributed processing environment, as well as the group's other research on embedded computing. The group designed and built a prototype hardware platform consisting of six wirelessly networked modules. Because Dr. Brady moved from UIUC to Duke University, Distant Focus then subcontracted with UIUC in spring 2001 to complete the project.

Program Focus for Distant Focus

The goal of this DARPA program was to develop unattended sensors and sensor arrays that track targets accurately and robustly using 3-D tomographic techniques. In principle, the more information the sensor array collects, the easier this task becomes. For example, multi-modal sensing (acoustic, optical, magnetic, and seismic) combined with spatially distributed arrays provides diverse information and should improve system performance. In practice, the processing power needed to fuse these data streams can overwhelm system resources. This program showed that tomographic methods achieve multi-sensor data fusion more efficiently than higher-level approaches, and that tomographic algorithms can run on arrays with modest processing capabilities.
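To make the idea of tomographic fusion concrete, here is a minimal sketch of one generic technique, unfiltered back-projection onto a shared grid. It is not the MEDUSA or Distant Focus algorithm. It assumes, hypothetically, that each sensor sits at a known 2-D position and reports only a bearing (angle) to the target. Each report is spread across the grid as soft evidence, the per-sensor layers are summed, and the cell with the highest total is taken as the estimated target location. Fusion happens directly on the measurements in a common volume using only cheap per-cell arithmetic. The function names, grid size, cell size, and angular width `sigma` are all illustrative assumptions.

```python
import math

# Hypothetical sketch: bearing-only sensors fused by back-projection on a 2-D grid.
# None of these names or parameters come from the MEDUSA project.


def back_project(position, bearing, grid_size, cell, sigma):
    """Spread one bearing report across the grid as soft evidence.

    Each cell scores exp(-0.5 * (d / sigma)**2), where d is the angular
    difference between the reported bearing and the direction from the
    sensor to the cell centre. Cells lying on the reported ray score 1.0.
    """
    sx, sy = position
    layer = [[0.0] * grid_size for _ in range(grid_size)]
    for i in range(grid_size):
        for j in range(grid_size):
            cx, cy = (i + 0.5) * cell, (j + 0.5) * cell
            angle = math.atan2(cy - sy, cx - sx)
            # Wrap the difference into [-pi, pi] so angles near +/-pi compare correctly.
            d = math.atan2(math.sin(angle - bearing), math.cos(angle - bearing))
            layer[i][j] = math.exp(-0.5 * (d / sigma) ** 2)
    return layer


def fuse(reports, grid_size=20, cell=1.0, sigma=0.05):
    """Sum every sensor's back-projection into one shared evidence grid."""
    total = [[0.0] * grid_size for _ in range(grid_size)]
    for position, bearing in reports:
        layer = back_project(position, bearing, grid_size, cell, sigma)
        for i in range(grid_size):
            for j in range(grid_size):
                total[i][j] += layer[i][j]
    return total


def most_likely_cell(total):
    """Return (i, j, score) for the cell with the highest fused evidence."""
    score, i, j = max(
        (v, i, j) for i, row in enumerate(total) for j, v in enumerate(row)
    )
    return i, j, score


if __name__ == "__main__":
    target = (10.5, 7.5)  # centre of cell (10, 7) when the cell size is 1.0
    positions = [(0.0, 0.0), (20.0, 0.0), (0.0, 20.0)]
    # Simulated noise-free bearings from each sensor to the target.
    reports = [
        (p, math.atan2(target[1] - p[1], target[0] - p[0])) for p in positions
    ]
    print(most_likely_cell(fuse(reports)))
```

Only the target cell lies on all three rays, so it collects the full score of one per sensor. Cells on a single ray score at most one and stay well below it. Real measurements would be noisy, and a practical system would likely use a 3-D volume, filtering, and per-sensor error models. The sketch deliberately omits all of these.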

Distant Focus Corporation's contribution was to complete the hardware and software for the first-generation sensor modules, design and develop a second-generation sensing and processing module, develop tomographic tracking algorithms, and create a user interface for data analysis. In addition, Distant Focus extended web-based control interfaces to both laptops and personal digital assistants (PDAs). Shown below are several of the features implemented by the Distant Focus team.

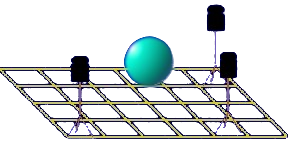

3-D Visualization of Tomographic Models: Data as displayed in the CUBE, a six-wall immersive environment run by the Illinois Simulator Laboratory at the University of Illinois Beckman Institute. The display shows how data from multiple sensors are combined to determine where an object is located within the volume. The region of highest object probability is colored yellow.

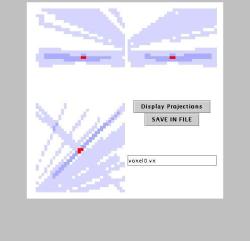

3-D Model Display via Web Interface: Live 3-D data viewed with a Java client. The software platform was designed as a distributed application running on the sensor array itself. Because the sensor array served the Java applet, any device that could run Java was able to query the array for information. Such user-interface devices were treated as just another node in the array.

Module Comparison: The second-generation module, designed and constructed at Distant Focus, shown next to the first-generation modules designed and constructed at the University of Illinois. Both use 802.11 wireless networking for communication, but beyond that they have little in common. The second-generation module is considerably smaller and uses less power than the first-generation modules. This was achieved by using a StrongARM processor rather than a standard x86 processor. The second-generation module is also more modular and accepts a wide variety of sensor types; in effect, it is a generic sensor platform that provides power management, processing, and communications. The first-generation modules were designed solely for the tomographic application. As a result, they were largely limited to a few cameras as sensors and had poor battery life.